The key to successfully leveraging artificial intelligence (AI) in healthcare rests not only in the technical aspects of predictive and prescriptive models but also in change management within healthcare organizations. Better adoption and results with AI depend on a commitment to the challenge of change, the right tools, and a human-centered perspective.

To succeed in change management and get optimal value from predictive and prescriptive models, clinical and operational leaders must use three perspectives:

1. Functional: Does the model make sense?

2. Contextual: Does the model fit into the workflow?

3. Operational: What benefits and risks are traded?


This report is based on a webinar presented by Jason Jones, PhD, Chief Data Scientist at Health Catalyst, on February 27, 2019, titled “3 Perspectives to Better Apply Predictive & Prescriptive Models in Healthcare.”

Artificial intelligence (AI) is a hot topic in health and healthcare today. Most of the focus has been on the technical challenges of building and deploying predictive and prescriptive models. While there is always room for improvement, algorithms have advanced to a point that allows us to focus on the bigger challenge: change management. By acknowledging that change is hard, “tooling up,” and maintaining a human-centered perspective, we can achieve better adoption and results with AI.

Predictive and prescriptive models can enhance health and healthcare, which will ultimately help improve Quadruple Aim outcomes: population health, patient experience, reduced cost, and positive provider work life. However, successful adoption of predictive and prescriptive models heavily depends upon behavior change, which requires more than technical accuracy.

While predictive algorithms abound, tools to facilitate change management remain scarce. This article will help clinical and operational leaders obtain value from predictive and prescriptive models using three perspectives: functional, contextual, and operational. Investing the time and effort to achieve these three levels of model understanding is necessary for broad-scale AI adoption.

Healthcare leaders currently delving into AI must understand what predictive and prescriptive models are and how they differ:

Healthcare leaders and data scientists have many predictive and prescriptive modeling resources available, including some free clinical prediction models. The availability of these resources shows that developing predictive models is no longer a question of technical aptitude but of whether a health system is prepared to deploy them.

The first question clinical and operational leaders need to answer is, “What are we trying to achieve?” Algorithms and predictive models are commodities employed to achieve specific outcomes. A famous quote by musician George Harrison applies to this concept: “If you don’t know where you’re going, any road will take you there.” The same can be said about predictive and prescriptive models. It’s important to know the desired outcome before setting out to build a predictive model.

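Healthcare leaders often disagree over the best course of action to effect change within an organization. To successfully adopt AI technologies, frontline leaders can use a framework for change management that sets expectations and facilitates productive dialogue to encourage consensus and buy-in. The framework includes three levels of understanding:

1. Functional model understanding: Does the model make sense?

2. Contextual model understanding: Does the model fit into the workflow?

3. Operational model understanding: What benefits and risks are traded?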

Once healthcare leaders have this three-level framework for change management in place, they can begin to deploy predictive and prescriptive models. Attaining functional, contextual, and operational understanding helps ensure that teams don’t lose track of the problem they’re trying to solve and gives them the confidence to move decision makers within the organization toward agreement.

To demonstrate, this article will examine three examples of how teams can move through the three levels of understanding.

In the first example, an analytics team wants to build a predictive model that determines which patients in the emergency department (ED) are at greatest risk for progressing to severe sepsis or septic shock within 24 hours.

In functional model understanding, the team might begin by asking questions to achieve a basic understanding of how the model should work:

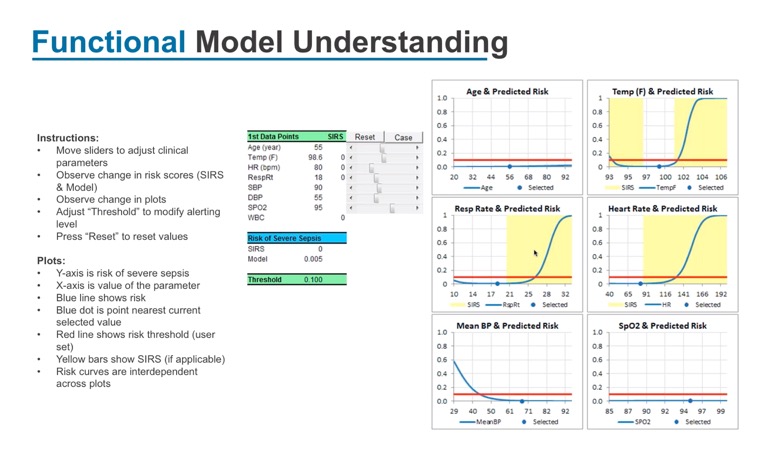

The analytics team needs help answering these questions; it needs input from clinicians to build a model that makes sense. Figure 1 illustrates a sample model for predicting whether a patient will progress to severe sepsis or septic shock within a 24-hour period, using six key parameters. In this example model, the patient’s risk of developing severe sepsis or septic shock moves up or down as the parameters change.

Clinician input and understanding are key to getting the healthcare organization to embrace and adopt this model down the road. Data analysts and data scientists need to make the predictive model transparent, so clinicians understand how it works, and, in turn, support the model—a critical step for adoption.
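To make the idea of a transparent model concrete, here is a minimal Python sketch of an additive (logistic) risk score that reports how much each parameter pushes a patient’s risk up or down. The six parameter names, their 1/0 encoding, the intercept, and every weight are hypothetical placeholders, not the parameters or coefficients in Figure 1, and logistic regression is only one transparent model family among several.

```python
import math

# Hypothetical parameters and weights, chosen only for illustration.
# They are NOT the six parameters or coefficients shown in Figure 1.
INTERCEPT = -6.0
COEFFICIENTS = {
    "heart_rate_gt_90": 0.8,
    "temperature_abnormal": 0.6,
    "resp_rate_gt_20": 0.7,
    "wbc_abnormal": 0.9,
    "lactate_gt_2": 1.4,
    "systolic_bp_lt_100": 1.2,
}


def explain_risk(patient: dict) -> tuple:
    """Return (probability, contributions) for a logistic risk score.

    Each contribution is one parameter's share of the log-odds, so a
    clinician can see which findings raised the risk and by how much.
    Parameters missing from `patient` are treated as absent (0).
    """
    contributions = {
        name: weight * patient.get(name, 0.0)
        for name, weight in COEFFICIENTS.items()
    }
    log_odds = INTERCEPT + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-log_odds))
    return probability, contributions


if __name__ == "__main__":
    # A hypothetical ED patient with two abnormal findings (1 = present).
    patient = {"lactate_gt_2": 1, "systolic_bp_lt_100": 1}
    risk, parts = explain_risk(patient)
    print(f"Predicted 24-hour risk: {risk:.1%}")
    # List parameters from largest to smallest push on the risk.
    for name, value in sorted(parts.items(), key=lambda kv: -kv[1]):
        print(f"  {name:22s} {value:+.2f}")
```

Showing per-parameter contributions alongside the score is one way to let clinicians check whether the model “makes sense” before debating its accuracy.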

George Box, a British statistician, famously said, “All models are wrong, but some are useful.” The team is trying to build a useful model, and its utility depends not just on how well it predicts the event but on what somebody does with the newly discovered information. Obtaining functional model understanding is the first step.

Taking the sepsis example above, obtaining contextual model understanding requires the team to ask a different set of questions:

Once the team has created the predictive model and gained a functional understanding, it needs to analyze the model in a contextual sense, starting with whether the model is identifying the right patients at the right time. If the model identifies a patient who is at risk for severe sepsis or septic shock but fails to flag him until after he’s in critical care, the model is not useful.
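One way to test whether a model identifies the right patients at the right time is to compare each patient’s first alert with the time of the event, such as transfer to critical care. The Python sketch below assumes a hypothetical list of (first alert, event) timestamp pairs and a two-hour minimum lead time; both the data shape and the threshold are illustrative assumptions that a team would set with its clinicians.

```python
from datetime import datetime, timedelta


def fraction_actionable(cases, min_lead=timedelta(hours=2)):
    """Share of cases whose first alert preceded the event by at least min_lead.

    Each case is a (first_alert_time or None, event_time) pair. An alert that
    fires after the event (for example, after transfer to critical care) or
    never fires at all does not count as actionable.
    """
    if not cases:
        return 0.0
    actionable = sum(
        1
        for first_alert, event in cases
        if first_alert is not None and event - first_alert >= min_lead
    )
    return actionable / len(cases)


if __name__ == "__main__":
    day = datetime(2020, 1, 1)  # arbitrary hypothetical date
    cases = [
        (day.replace(hour=9), day.replace(hour=14)),   # alert 5 h before event
        (day.replace(hour=15), day.replace(hour=14)),  # alert 1 h after event
        (None, day.replace(hour=12)),                  # never alerted
    ]
    print(fraction_actionable(cases))
```

A model with excellent overall accuracy can still score poorly here if most of its alerts arrive after clinicians have already acted.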

The next question the team might ask for contextual understanding is, “Who’s going to take action?” Should a phlebotomist draw a lab through an automated order? Should the patient receive fluids earlier? Figure 2 depicts an example I-Chart, which shows the details leading up to an incident and can support contextual model understanding.

To obtain contextual model understanding, the team would look at patients who may be at risk for severe sepsis or septic shock. The I-Chart provides additional information about diagnoses, comorbidities, and recent clinical encounters (e.g., whether the patient had recently been seen in an ambulatory setting). The chart covers the first 24 hours after the patient checks into the ED and tracks the six parameters the analytics team identified during functional model understanding. This gives the team the complete context for this patient in relation to the predictive model. With this information, and by asking questions in the proper context, the team can adjust the model to improve its utility and further gain clinician buy-in.

To demonstrate operational model understanding with a different example, suppose the team wants to identify patients who will develop coronary heart disease (CHD). Unlike the sepsis example, which is limited to events within a span of hours or days, this model draws on decades’ worth of information. In building this model, the team will want to consider the following questions:

To build a useful model, the team will need to think not only about which patients might develop heart disease over the next decade but also about what to do with that information. One possible action is to prescribe a statin for the patient. Prescribing a statin is a relatively low-intensity action, but it also comes with possible side effects and associated costs. Operational model understanding compels teams to examine causes and effects and weigh the tradeoffs that often come with medical decision making.

If the team’s goals for this model are to capture 50 percent of patients who develop CHD in the next 10 years and, among the patients identified, to have 50 percent of statin prescriptions go to patients who actually develop CHD, how can the team set appropriate expectations for the tradeoffs it is making? Can it gain clinician and leadership buy-in for a model that would benefit 50 percent of identified patients? If not, how can it modify the model to get the buy-in it needs for adoption? This type of organizational dialogue and value balancing facilitates operational model understanding.
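The 50/50 goals above correspond to a sensitivity of 0.5 and a positive predictive value (PPV) of 0.5. A short calculation, sketched here in Python, turns those rates into patient counts that clinicians and leaders can discuss. The figure of 1,000 patients who develop CHD is a hypothetical cohort size chosen only for illustration.

```python
def statin_tradeoff(n_cases: int, sensitivity: float, ppv: float) -> dict:
    """Translate sensitivity and PPV into approximate patient counts.

    n_cases: patients in the cohort who go on to develop CHD (hypothetical).
    sensitivity: share of those patients the model flags.
    ppv: share of flagged patients who actually develop CHD.
    """
    if not 0 < ppv <= 1 or not 0 <= sensitivity <= 1:
        raise ValueError("sensitivity must be in [0, 1] and ppv in (0, 1]")
    captured = n_cases * sensitivity  # true positives
    flagged = captured / ppv          # everyone who would be offered a statin
    return {
        "captured": round(captured),
        "missed": round(n_cases - captured),
        "flagged": round(flagged),
        "statins_without_chd": round(flagged - captured),  # false positives
    }


if __name__ == "__main__":
    # Hypothetical cohort in which 1,000 patients develop CHD within 10 years.
    print(statin_tradeoff(n_cases=1000, sensitivity=0.5, ppv=0.5))
    # -> {'captured': 500, 'missed': 500, 'flagged': 1000, 'statins_without_chd': 500}
```

Under these goals, half of future CHD cases go unflagged, and for every flagged patient who goes on to develop CHD, another flagged patient receives a statin without developing CHD in the window. Framing the goals as counts like these can make the tradeoff easier to debate than abstract percentages.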

To successfully adopt predictive and prescriptive models, most healthcare organizations will have to do the heavy lifting of change management and of overcoming organizational inertia. Analytics teams can leverage three specific tool sets to facilitate the change management process: functional understanding, contextual understanding, and operational understanding. With these three tools, teams are more likely to deploy predictive and prescriptive models successfully.

An important part of a model’s success is ongoing collaboration, along with the goal of creating something that everyone, from data scientists to clinicians, can understand, trust, and use. Successful deployment ultimately depends on the model’s usefulness and level of adoption.
